A Novel Low-Cost Adaptive Scanner Concept for Mobile Robots




DOI: https://doi.org/10.15446/ing.investig.v34n3.41585

I. Stančić¹, J. Musić² and M. Cecić³

¹ Ivo Stančić. Electrical engineer, PhD. Affiliation: Research Assistant, Faculty of Electrical Engineering, Mechanical Engineering and Naval Architecture, University of Split, Croatia. E-mail: istancic@fesb.hr

² Josip Musić. Electrical engineer, PhD. Affiliation: Research Assistant, Faculty of Electrical Engineering, Mechanical Engineering and Naval Architecture, University of Split, Croatia. E-mail: jmusic@fesb.hr

³ Mojmil Cecić. Electrical engineer, PhD. Affiliation: Full Professor, Faculty of Electrical Engineering, Mechanical Engineering and Naval Architecture, University of Split, Croatia. E-mail: mcecic@fesb.hr

How to cite: Stancic, I., Music, J., & Cecic, M. (2014). A Novel Low-Cost Adaptive Scanner Concept for Mobile Robots. Ingeniería e Investigación, 34(3), 37-43.

ABSTRACT

A fundamental problem in mobile robot applications is the need for accurate knowledge of the position of a vehicle for localizing itself and for avoiding obstacles in its path. In the search for a solution to this problem, researchers and engineers have developed different sensors, systems and techniques. Modern mobile robots rely on information obtained from a variety of sensors and on sophisticated data fusion algorithms. In this paper, a novel concept for a low-cost adaptive scanner based on a projected light pattern is proposed. The main advantage of the proposed system is its adaptability, which enables both rapid scanning of the robot's surroundings in search of obstacles and a more detailed scan of a single object to retrieve its surface configuration and perform some limited analyses. This paper addresses the concept behind such a scanner, for which a proof of concept was built using an office DLP projector. During the measurements, the accuracy of the proposed system was tested on obstacles and objects with known configurations. The obtained results are presented and analyzed, and conclusions about the system's performance and possible improvements are discussed.

Keywords: Range finder, 3D scanner, mobile robot, structured light, laser, projector.

SUMMARY (RESUMEN)

The fundamental problem in mobile robot applications is the need to know the vehicle's position accurately, so that it can localize itself in space and avoid obstacles in its path. In the search for a solution, researchers and engineers have developed different sensors, systems and techniques.

Modern mobile robots rely on information obtained from different sensors and on sophisticated data fusion algorithms. For this reason, this article proposes a new concept for a low-cost adaptive scanner based on projected light patterns. The main advantage of the proposed system is its adaptability, which allows robots to scan their surroundings rapidly while searching for obstacles and to explore a given object in more detail, so as to recover its surface configuration and carry out some limited analyses.

The article addresses the concept of such a scanner, for which a proof of concept was achieved using an office DLP projector. During the measurements, the accuracy of the proposed system was tested using obstacles and objects with known configurations. The results obtained are presented and analyzed, and conclusions about the system's performance are discussed with a view to possible improvements.

Keywords: range finder, 3D scanner, mobile robot, structured light, laser and projector.

Received: January 15, 2014. Accepted: May 30, 2014.

Introduction

A fundamental problem in mobile robot applications is the need for accurate knowledge of the position of a vehicle with respect to its surroundings (Borenstein, 1997; Kucsera, 2006). Obtaining accurate information about a robot's environment quickly and reliably is an essential step in developing successful robot navigation systems. Current systems usually employ a variety of strategically placed sensors or are guided by an operator (Abu Dalhoum, 2008). Designing autonomous mobile robots requires the integration of many sensors and actuators, a task that involves many compromises (Aufrere, 2003; Fotiadis, 2013; Liu, 2012; Perrollaz, 2006). Thus, mobile robots are equipped with one or more perception systems whose sole function is to detect surrounding objects, so that collisions and other potentially risky situations can be avoided during navigation. A single sensor cannot adequately and reliably capture all objects in a robot's vicinity. To overcome this problem, data from different sensors must be combined, a process known as sensor (data) fusion (Fotiadis, 2013; Perrollaz, 2006).

According to their functionality, mobile robot sensors can generally be divided into two groups (each of which can be further subdivided):

- Obstacle avoidance sensors, which sense dynamic or static obstacles in the robot's vicinity and allow the robot to avoid collisions.

- Localization sensors, which collect data to determine an accurate position of the robot for navigational purposes.

Localization sensors that enable robot navigation are commonly divided into two subgroups: relative position and absolute position sensors (Borenstein, 1997). Odometry and inertial navigation systems, the most common relative position measuring systems, can measure the displacement of a mobile robot but cannot detect surrounding obstacles. Absolute position measuring systems, such as active beacons (or GPS outdoors), share the same limitation. These systems are therefore used on their own only in constrained environments, where an accurate map of the area and localization are sufficient for navigating mobile robots. Mobile robots can also use landmarks (distinct features) to improve localization accuracy.

Obstacle detection is a primary requirement for any autonomous robot (Darms, 2009), and various types of sensors can be used for it. Commonly used sensing devices for obstacle detection include contact sensors, infrared sensors, ultrasonic sensors, laser range finders and vision systems (Fayad, 2007; Sungbok, 2010). Unlike contact sensors, ranging sensors allow a robot to detect an obstacle without physically touching it. According to their operating principle, ranging sensors can generally be divided into two subgroups (Kucsera, 2006). Time-of-flight (TOF) sensors exploit the propagation speed of an emitted signal and measure either the travel time or the phase shift between the transmitted and detected signals. The transmitted signal can be sound, light or radio (radar) waves. An ultrasonic sensor, for example, transmits ultrasonic waves generated by a piezoelectric transducer and measures the time the echo takes to return to the receiver. The main disadvantage of ultrasonic sensors is that, while they can determine whether an obstacle is present in an area, they provide no additional information about it. A set of ultrasonic sensors is commonly installed at regular intervals along the sides of a mobile robot (Sungbok, 2010). When more than one sensor is used, interference between them must be avoided, which increases the scanning time. The same principle applies to other types of TOF sensors, where the type of transmitted wave determines the sensor's minimum and maximum range, resolution and speed. The second subgroup consists of sensors that rely on simple geometric principles (Hartley, 2003; Shapiro, 2001), which include multi-camera systems and various laser scanners.
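
The two time-of-flight variants mentioned above reduce to simple formulas: for a pulsed echo the range is half the round-trip path (d = v·t/2), and for phase-shift ranging with modulation frequency f it is d = c·φ/(4πf). The Python sketch below illustrates both, together with the extra scan time incurred when several ultrasonic sensors are fired one after another to avoid interference. The temperature model for the speed of sound (331.3 + 0.606·T m/s) is a common approximation, and the function names and example values are illustrative assumptions, not taken from the paper.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in air (m/s); linear model, assumed valid near room temperature."""
    return 331.3 + 0.606 * temp_c


def range_from_echo(round_trip_s: float, wave_speed: float) -> float:
    """Pulsed TOF: the signal travels to the target and back, so the path is halved."""
    return wave_speed * round_trip_s / 2.0


def range_from_phase(phase_rad: float, mod_freq_hz: float,
                     wave_speed: float = SPEED_OF_LIGHT) -> float:
    """Continuous-wave TOF: d = c * phi / (4 * pi * f); unambiguous only below c / (2 f)."""
    return wave_speed * phase_rad / (4.0 * math.pi * mod_freq_hz)


def sequential_scan_time(n_sensors: int, max_range_m: float, wave_speed: float) -> float:
    """Sensors fired one at a time: each must wait for an echo from the maximum range."""
    return n_sensors * 2.0 * max_range_m / wave_speed


if __name__ == "__main__":
    c_air = speed_of_sound(20.0)
    print(f"echo of 5.83 ms -> {range_from_echo(5.83e-3, c_air):.2f} m")
    print(f"phase pi/2 at 10 MHz -> {range_from_phase(math.pi / 2, 10e6):.3f} m")
    print(f"12 sonars, 5 m range -> {sequential_scan_time(12, 5.0, c_air) * 1e3:.0f} ms")
```

Because sequential firing makes the scan time grow linearly with the number of sensors, avoiding cross-talk directly costs scanning speed.
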
The most commonly used sensor is a camera, sensitive to either visible or infrared light, in a variety of setups such as monocular or stereo vision. The basic principle is similar to that of human vision (Hartley, 2003). The two main downsides of this method are its complexity and its computational cost. In general, robots' embedded computers lack the computing power to benefit fully from vision systems, and the need for real-time processing on limited on-board hardware makes this problem especially demanding. In contrast, if simple sensors, or sensors that output simple analog or digital signals, are used, the robot can be guided by a relatively simple onboard computer. Kumari et al. (2012) created a mobile robot that can navigate a building without human intervention: signals from infrared sensors are analyzed by an ATmega32 microcontroller, which controls the actuators and guides the robot through the environment. Unlike robots designed for indoor use, mobile robots used primarily outdoors, where a more dynamic environment is expected, require more complex sensors that output more complex signals. Real-time vision systems used in UGVs (unmanned ground vehicles) (Fisher, 2013) are constrained to lower resolutions because of the vehicles' limited processing power and low payload capacity.

In contrast to vision systems, laser scanners allow simpler calculation of an object's location. Most common laser-based devices point a laser beam at a rotating mirror, so fast scanning can be accomplished in the vertical direction, but the entire scanner has to move horizontally if 3D scanning is required. Because the number of points measured is relatively low, detection based solely on laser data may be unreliable (Fotiadis, 2013). An additional drawback of laser-based scanners is that in normal daylight the system cannot distinguish the reflected laser beam from the surrounding sunlight (Kucsera, 2006). This drawback can be addressed by using higher-power lasers and optical filters matched to the wavelength of the laser. A typical laser ranging system covers a half-circle with a 50 m radius for large objects (more than 1 m as viewed from the scanner) (Mertz, 2013). The CMU-RI Navlab group has developed such a system, which uses a laser scanner as its primary sensor (Mertz, 2013). The MIT team in the DARPA Urban Challenge has made its data available (Huang, 2010); their vehicle carried a Velodyne lidar, five cameras and 12 SICK laser scanners. Camera-based vision systems generally provide more information than laser systems, including an object's texture and shape, which makes them well suited to object recognition (Fotiadis, 2013).
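To make concrete why a rotating-mirror scanner produces only a single scan line, and why the whole unit must be moved to obtain 3D data, the sketch below converts mirror-sweep range readings into Cartesian points and stacks several sweeps taken at different pan angles. The axis convention (mirror sweeping elevation in a vertical plane, pan about the vertical z axis), the function names and the example wall are assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def scan_line_to_points(ranges: List[float], start_deg: float, step_deg: float,
                        pan_deg: float = 0.0) -> List[Point3]:
    """Convert one mirror sweep (elevation angles) into 3D points.

    The whole scanner is assumed to be panned by pan_deg about the vertical z axis.
    """
    pan = math.radians(pan_deg)
    points = []
    for i, r in enumerate(ranges):
        elev = math.radians(start_deg + i * step_deg)
        horiz = r * math.cos(elev)          # range projected onto the ground plane
        points.append((horiz * math.cos(pan),
                       horiz * math.sin(pan),
                       r * math.sin(elev)))
    return points


def sweep_volume(range_grid: List[List[float]], start_deg: float, step_deg: float,
                 pan_angles_deg: List[float]) -> List[Point3]:
    """Stack vertical scan lines taken at different pan angles into one 3D cloud."""
    cloud: List[Point3] = []
    for ranges, pan in zip(range_grid, pan_angles_deg):
        cloud.extend(scan_line_to_points(ranges, start_deg, step_deg, pan))
    return cloud


if __name__ == "__main__":
    # Hypothetical flat wall at x = 2 m, sampled at three elevations and three pan angles.
    elevations = [-10.0, 0.0, 10.0]
    pans = [-15.0, 0.0, 15.0]
    grid = [[2.0 / (math.cos(math.radians(e)) * math.cos(math.radians(p)))
             for e in elevations] for p in pans]
    for x, y, z in sweep_volume(grid, -10.0, 10.0, pans):
        print(f"{x:6.3f} {y:6.3f} {z:6.3f}")
```

Without the pan motion, every point would lie in a single vertical plane, which is the limitation described above.
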

There are three well-known reasons why multiple sensors are installed on mobile robots (Borenstein, 1997; Kucsera, 2006). First, one sensor alone cannot cover the entire area of interest. Second, combining sensors with different properties, such as different ranges, resolutions, update rates and even sensing modalities, achieves greater robustness and higher-quality detection than any single type of sensor. Third, multiple sensors provide redundancy. Liu et al. (2012) proposed a fast obstacle detection algorithm for mobile robots based on the fusion of a vision sensor and an ultrasonic sensor; the distance between the robot and a rectangular obstacle is obtained by the ultrasonic sensor mounted on the robot's head, and the algorithm serves local path planning for a hexapod robot. Obstacle detection sensors are a useful addition even in systems that generally operate in closed, well-known environments, especially when the robot has to avoid humans, animals or other types of obstacles. In recent years, great effort has been made toward developing systems for pedestrian detection and avoidance (Fuerstenberg, 2005; Gandhi, 2007; Navarro-Serment, 2008; Premebida, 2009). Small, low-cost mobile robots are limited in their choice of sensors. The complexity and high processing-power requirements of stereo vision systems (or any vision system) preclude their use in robots with limited payloads. Industrial-grade laser scanners (e.g., SICK) (Cang, 2002) are in some cases more expensive than the robot itself or too bulky to be used efficiently. The Microsoft Kinect, whose primary function is as a game-console input device, has inspired researchers to use it as a robot navigation device. Correa et al. (2012) successfully implemented the Kinect as a sensor for the indoor navigation of an autonomous surveillance mobile robot. However, it lacks adaptive capabilities, which could reduce costs, especially for smaller robots. The literature review thus shows the need for a simple, cost-effective solution that combines the properties of fast obstacle detection sensors with those of high-resolution sensors for recognizing objects in a scene. This article addresses the analysis and proof of such a concept.

3D Scanner System

In this paper, a simple, low-cost laser range finder with 3D scanning capabilities is proposed. The system should enable mobile robots to detect obstacles in their vicinity and, when required, to obtain a detailed surface scan of a single object in order to analyze properties such as shape and size. A detailed surface scan might be required when a robot has to distinguish humans or other living beings in its workspace, both to avoid collisions and to predict their trajectories.

The adaptability of the proposed scanner is achieved by allowing it to project multiple patterns onto the target area. A longer scan with more than one projected pattern yields a more detailed result that enables limited object recognition, while a short scan (a single projected pattern) allows the scanner to detect obstacles without any detailed analysis. The adaptability of the system is described in more detail in the following subsections.
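The adaptability described above is essentially a trade-off between the number of projected patterns and the scan time. The sketch below tabulates it using figures reported later in this paper (49 points per pattern, a full cycle of 10 patterns, an effective rate of 30 frames per second in the unsynchronized DLP setup); the helper name and the intermediate pattern counts are illustrative assumptions.

```python
from dataclasses import dataclass

POINTS_PER_PATTERN = 49     # 6 arms x 8 points + 1 centre point
FULL_CYCLE_PATTERNS = 10    # patterns in one full scan cycle
EFFECTIVE_FPS = 30.0        # 60 Hz projector and camera, unsynchronized -> 30 Hz


@dataclass
class ScanMode:
    patterns: int
    points: int
    duration_ms: float


def scan_mode(patterns: int) -> ScanMode:
    """Points gathered and time spent when `patterns` rotated patterns are projected."""
    if not 1 <= patterns <= FULL_CYCLE_PATTERNS:
        raise ValueError("patterns must be between 1 and the full cycle length")
    return ScanMode(patterns, patterns * POINTS_PER_PATTERN,
                    1000.0 * patterns / EFFECTIVE_FPS)


if __name__ == "__main__":
    for k in (1, 2, 5, FULL_CYCLE_PATTERNS):
        m = scan_mode(k)
        print(f"{m.patterns:2d} pattern(s): {m.points:3d} points in {m.duration_ms:5.1f} ms")
```

With these figures a single pattern takes about 33 ms and a full cycle about 333 ms, consistent with the roughly 330 ms reported in the experiments; since the duration is simply patterns divided by frame rate, a faster camera with synchronized projection would shorten both proportionally.
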

Materials and methods

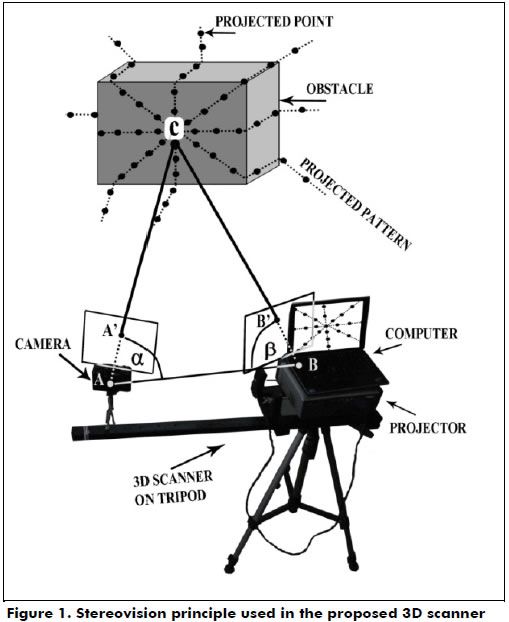

The proposed concept of an adaptive scanner was tested in a laboratory with an off-the-shelf DLP (Digital Light Processing) projector as the main component (Figure 1). The DLP projector projects light patterns as a laser projector would. Paired with a compact digital camera, the DLP projector forms a simple stereovision system (Rocchini, 2001), in which the projector is the active component and the camera the passive one. The same stereovision principle allows human vision to perceive a range map of the surrounding world (Hartley, 2003). The video projector used was an Optoma EP739 DLP, projecting video at a resolution of 1024 x 768 pixels at a frame rate of 60 Hz, while a Canon G9 digital camera recorded at a resolution of 640 x 480 pixels at a frame rate of 60 Hz. These components were used only for the proof-of-concept tests; the complete system would include a laser light source instead of a projector and a high-speed camera, enabling scans to be executed within a few milliseconds.

Stereovision system and calibration: If a single light ray is projected from the projector (denoted B in Figure 1), it passes through the projector's image plane at point B' and hits the target at point C. The reflected ray is captured on the camera's image plane at point A'. The camera pixel (A') and the projector pixel (B') correspond, respectively, to the angle between the camera and the object (α) and the angle between the projector and the object (β). If the exact positions of the camera and projector in the world coordinate frame are known, reconstructing the exact position of point C reduces to a simple triangulation problem.
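Once α and β are known, recovering point C is a triangle problem with one known side, the camera-projector baseline, solved here with the law of sines. A minimal 2D sketch, assuming the camera sits at the origin, the projector at distance b along the baseline, and both angles are measured from the baseline toward the target; this convention, the function name and the example geometry are assumptions, not taken from the paper.

```python
import math
from typing import Tuple


def triangulate(alpha_rad: float, beta_rad: float, baseline: float) -> Tuple[float, float]:
    """Locate target point C from the two viewing angles and the baseline length.

    Camera at (0, 0), projector at (baseline, 0); alpha is measured at the
    camera and beta at the projector, both from the baseline toward C.
    Returns (x, z): position along the baseline and perpendicular depth.
    """
    gamma = math.pi - alpha_rad - beta_rad          # angle of the triangle at C
    if gamma <= 0.0:
        raise ValueError("rays do not intersect in front of the baseline")
    cam_to_c = baseline * math.sin(beta_rad) / math.sin(gamma)   # law of sines
    return cam_to_c * math.cos(alpha_rad), cam_to_c * math.sin(alpha_rad)


if __name__ == "__main__":
    # Hypothetical geometry: 0.2 m baseline, target 1.5 m in front of its midpoint.
    b, depth = 0.2, 1.5
    a = math.atan2(depth, b / 2)
    x, z = triangulate(a, a, b)
    print(f"x = {x:.3f} m, z = {z:.3f} m")
```

In practice the angles are not measured directly but follow from the pixel positions A' and B' and the calibrated geometry, which is why the calibration step below is essential.
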

Calibration is a mandatory requirement for any multi-camera vision system (Heikkila, 1997; Tsai, 1987; Zhang, 1999; Zollner, 2004). It determines the relations between the cameras and the scene, so that objects can be reconstructed simply once their locations on the camera and projector planes (A' and B') are known. The measurement procedure begins with a calibration step, performed once after the camera and projector have been placed at the desired locations with respect to each other. The outputs of the calibration are the camera and projector matrices Pc and Pp, respectively, which contain orientation and position information in the coordinate frame defined by the calibration object. The extrinsic part of the calibration requires scanning a scene with a well-known configuration that covers most of the system's field of view. Given a set of corresponding pixel pairs, the system is calibrated using the approach proposed by Heikkila (Heikkila, 1997). To improve the calibration and to minimize errors due to manual key-point selection, redundancy was built into the process by using nine calibration points, denoted 1-9 in Figure 2, with five objects present in the scene. The intrinsic part of the calibration was not performed because lens distortion in both the projector and the camera was found to be minimal. The coordinates of each point on the scanned surface are calculated using the Direct Linear Transformation (DLT) (Abdel-Aziz, 1971). The position of point X in the reference coordinate frame is derived using equations (1) and (2), where Xc and Xp are its coordinates in the camera and projector planes, respectively; Pc and Pp are the camera and projector matrices, respectively; τ is a triangulation function; and H is the linear transformation such that Xc = HXp (Hartley, 2003).
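The two computational steps of this paragraph, estimating a 3x4 matrix such as Pc or Pp from known calibration points and triangulating a surface point X from a camera/projector pixel pair, can be sketched with the standard linear (DLT) formulations described by Hartley and Zisserman. This is an illustration, not the authors' code: it assumes NumPy, ignores lens distortion (as the authors did), omits the coordinate normalization a robust implementation should add, and uses a synthetic camera/projector geometry with hypothetical intrinsics. Heikkila's procedure, which the authors actually used, is more elaborate than plain DLT.

```python
import numpy as np


def project(P: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Pinhole projection of a 3D point to pixel coordinates with a 3x4 matrix."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def estimate_projection_matrix(world_pts: np.ndarray, image_pts) -> np.ndarray:
    """DLT: estimate P (up to scale) from >= 6 non-coplanar 3D-2D point pairs."""
    rows = []
    for Xw, (u, v) in zip(world_pts, image_pts):
        Xh = np.append(Xw, 1.0)
        zero = np.zeros(4)
        rows.append(np.concatenate([Xh, zero, -u * Xh]))   # p1.X - u * p3.X = 0
        rows.append(np.concatenate([zero, Xh, -v * Xh]))   # p2.X - v * p3.X = 0
    _, _, vt = np.linalg.svd(np.array(rows))
    return vt[-1].reshape(3, 4)   # right singular vector of the smallest singular value


def triangulate(P_c: np.ndarray, P_p: np.ndarray, x_c, x_p) -> np.ndarray:
    """Linear triangulation: X from a camera pixel x_c and a projector pixel x_p."""
    A = np.array([
        x_c[0] * P_c[2] - P_c[0],
        x_c[1] * P_c[2] - P_c[1],
        x_p[0] * P_p[2] - P_p[0],
        x_p[1] * P_p[2] - P_p[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]           # back from homogeneous coordinates


if __name__ == "__main__":
    # Synthetic set-up (all values hypothetical): camera at the origin,
    # projector 0.2 m to its right, both looking along +z.
    K_c = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
    K_p = np.array([[1000.0, 0.0, 512.0], [0.0, 1000.0, 384.0], [0.0, 0.0, 1.0]])
    P_c_true = K_c @ np.hstack([np.eye(3), np.zeros((3, 1))])
    P_p_true = K_p @ np.hstack([np.eye(3), np.array([[-0.2], [0.0], [0.0]])])

    # Nine non-coplanar calibration points, mirroring the paper's nine markers.
    calib = np.array([[-0.3, -0.2, 1.4], [0.0, -0.2, 1.6], [0.3, -0.2, 1.3],
                      [-0.3, 0.0, 1.7], [0.0, 0.0, 1.5], [0.3, 0.0, 1.2],
                      [-0.3, 0.2, 1.3], [0.0, 0.2, 1.8], [0.3, 0.2, 1.5]])
    P_c = estimate_projection_matrix(calib, [project(P_c_true, X) for X in calib])
    P_p = estimate_projection_matrix(calib, [project(P_p_true, X) for X in calib])

    X_true = np.array([0.1, -0.05, 1.5])
    X_est = triangulate(P_c, P_p, project(P_c_true, X_true), project(P_p_true, X_true))
    print("reconstructed:", np.round(X_est, 6))
```

The calibration points must not all lie on one plane; with coplanar points the DLT system for a full 3x4 matrix becomes degenerate, which is one reason a scene with several objects at different depths is useful.
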

Projected patterns: The projection must contain as many points as possible, arranged so that every point of the pattern can be uniquely identified during a scan. Instead of projecting a single point onto the target area, the proposed system projects a pattern of 49 points in a star configuration: 6 star segments (arms) of 8 points each, plus one central point (6 x 8 + 1 = 49). Eight points per segment were chosen in view of the camera's resolution (480 vertical lines), which leaves at least 2 pixels of vertical distance between projected points in the full scan pattern. More points per segment would not dramatically increase the system's detection accuracy, while fewer points would decrease the scanner's total resolution. Six segments was the maximum number that did not place neighboring points too close to each other; a higher-resolution camera would allow more points in the star configuration. Several other pattern configurations were tested, but they gave a less favorable trade-off between algorithmic complexity (point recognition and tracking) and area coverage, so the proposed configuration was considered the best of those tested. By projecting multiple points onto the target area, the system can scan the desired area in a single frame (if the camera and projector are synchronized). Arranging the points along dotted lines makes each point easier to identify in the camera image. A simple prediction algorithm based on the pattern dynamics predicts the location of each point in the next scanning frame, thus minimizing errors when points overlap or are not visible in some frames.
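A sketch of how such a star pattern might be generated and rotated from frame to frame, together with a rotation-based prediction of where each point should appear in the next frame. The radial spacing, the half-step outward shift applied to alternate arms, and the per-frame rotation of 6 degrees (one tenth of the 60-degree arm spacing, so that 10 frames fill the gaps between arms) are assumptions chosen for illustration; the paper does not give these values. The prediction works in the projector's own coordinates; predicting positions in the camera image would additionally require the calibrated projection.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

ARMS = 6
POINTS_PER_ARM = 8
FRAMES_PER_CYCLE = 10


def star_pattern(radius: float = 1.0, rotation_rad: float = 0.0) -> List[Point]:
    """49-point star: one centre point plus 6 arms of 8 points each.

    Alternate arms are shifted outward by half a radial step (an assumed
    stagger) so that neighbouring arms do not place points at equal radii.
    """
    step = radius / (POINTS_PER_ARM + 0.5)
    pts: List[Point] = [(0.0, 0.0)]
    for arm in range(ARMS):
        theta = rotation_rad + arm * 2.0 * math.pi / ARMS
        offset = 0.5 * step if arm % 2 else 0.0
        for k in range(1, POINTS_PER_ARM + 1):
            r = k * step + offset
            pts.append((r * math.cos(theta), r * math.sin(theta)))
    return pts


def frame_rotation(frame: int) -> float:
    """Pattern rotation for a given frame: the 60-degree arm gap split into 10 steps."""
    return frame * (2.0 * math.pi / ARMS) / FRAMES_PER_CYCLE


def predict_next(points: List[Point], frame: int) -> List[Point]:
    """Predict where the points of `frame` will lie in frame + 1 (pure rotation)."""
    d = frame_rotation(frame + 1) - frame_rotation(frame)
    c, s = math.cos(d), math.sin(d)
    return [(c * x - s * y, s * x + c * y) for x, y in points]


if __name__ == "__main__":
    full_cycle = [p for f in range(FRAMES_PER_CYCLE)
                  for p in star_pattern(rotation_rad=frame_rotation(f))]
    print(len(star_pattern()), "points per pattern,", len(full_cycle), "per full cycle")
```

Because the centre point repeats in every frame and points crowd toward the centre as the arms rotate, the point density is highest in the middle of the projection, matching the behaviour described in the next paragraph.
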

In a single projected pattern (Figure 3, left), the points are arranged (the sides of the dotted lines are shifted) so that when the pattern rotates, the points do not overlap previous projections. One full scan cycle (Figure 3, right) consists of several single projected patterns. The center of the projected pattern has a higher point density, which results in a higher-resolution scan at the center and a lower-resolution scan at the projection edges. The final result of the 3D structured-light scanning process is a "point cloud" that represents the scanned surface.

Experimental Measurement

The system was tested in two scenarios, both of which could occur during normal operation. A set of five rectangular objects with known dimensions was arranged approximately as shown in Figure 2. Nine small markers, denoted 1-9 in Figure 2, were placed in the scene; their locations were measured with a precision caliper (0.2 mm) and used in the calibration process. The object dimensions were also measured with the caliper and served as reference values in the later accuracy trials. The minimum allowed distance between the objects and the experimental scanner was 20 cm, while the maximum distance was limited by the light intensity of the projected patterns (up to 5 m under our laboratory conditions). In the experiments, the scanner was placed 150 cm from the scanned objects.

After calibration, a partial scan (one projected frame) was executed, followed by a full scan (a full cycle of 10 projected frames). Each projected point was paired with its detected image, and a point cloud was derived from these pairs. A simple depth map was then constructed from the point data by 2D image interpolation. The projector and camera were both set to operate at 60 Hz; because they were not synchronized, the effective scanner frame rate dropped to 30 Hz. Since a full scan cycle contains 10 frames, a cycle is completed in about 330 ms (33 ms per projected frame).
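The paper builds the depth map with a "simple image interpolation method" without naming it. As a stand-in, the sketch below uses inverse-distance weighting (IDW) to spread the sparse point-cloud depths onto a regular grid; IDW, the grid size, the power parameter and the synthetic inclined plane are assumptions, and a triangulation-based interpolator would serve equally well. It assumes NumPy.

```python
import numpy as np


def idw_depth_map(xy: np.ndarray, depth: np.ndarray, width: int, height: int,
                  power: float = 2.0) -> np.ndarray:
    """Interpolate scattered depths onto a height x width grid by inverse-distance weighting.

    xy: (N, 2) point positions in grid units (x = column, y = row); depth: (N,).
    """
    cols, rows = np.meshgrid(np.arange(width), np.arange(height))
    grid = np.stack([cols.ravel(), rows.ravel()], axis=1).astype(float)   # (H*W, 2)
    d = np.linalg.norm(grid[:, None, :] - xy[None, :, :], axis=2)         # (H*W, N)
    exact = d < 1e-12
    w = 1.0 / np.maximum(d, 1e-12) ** power
    est = (w @ depth) / w.sum(axis=1)
    # Grid cells that coincide with a measured point take its value directly.
    hit = exact.any(axis=1)
    est[hit] = depth[exact[hit].argmax(axis=1)]
    return est.reshape(height, width)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pts = rng.uniform([0.0, 0.0], [63.0, 47.0], size=(490, 2))   # 490 points, as in a full scan
    z = 1500.0 - 5.0 * pts[:, 0]     # hypothetical inclined plane, depth in mm
    depth_map = idw_depth_map(pts, z, width=64, height=48)
    print(depth_map.shape)
```

IDW never produces values outside the range of the measured depths, which keeps sparse partial scans free of overshoot, at the cost of flattening sharp object edges.
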

Results and Discussion

The results are derived from the depth maps reconstructed in the previous step, with the partial and full scan cycles analyzed independently. The depth map of the original scene (the object formation from Figure 2) is shown in Figure 4a. The letter tags A-F mark the individual objects in the scene, and the circle shows the area covered by the projected patterns. All calculations and graphics were produced in MATLAB 2010. The depth map from one full scan cycle is shown in Figure 4b; it contains all objects of the original scene, with sizes and shapes resembling those in the original configuration. Using only the depth map in Figure 4b, simple calculations of the objects' sizes and types could be performed.

The depth map reconstructed from a partial scan is shown in Figure 4c. Nearby objects are detected and their distances calculated, but no other useful information about object shape and size can be extracted from this depth map. In a partial scan, some smaller objects may go undetected in the current frame: in Figure 4c, object C is not detected because the pattern does not cover it in the current projection frame. Rotating the projected pattern in subsequent frames allows a previously undetected object to be detected. A high-speed camera (and laser projector) would enable shorter scanning times (less than 33 ms per frame), minimizing the possibility of an obstacle going undetected during navigation.

As the number of points in the projected pattern increases (full scan), the resulting depth map becomes more similar to the ideal depth map (Figure 4a), and some limited analyses of the objects' characteristics become feasible. Table 1 shows the results of the depth reconstruction for the full scan cycle for all five objects, denoted A, B, C, D and E, and for the reference background, denoted F. The last row of Table 1 shows the results for all objects (all points detected on objects). The system's depth reconstruction thus achieves a mean error of 1.5 mm and an RMSE below 1 cm, which is more than adequate for its intended application.
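The error figures quoted above (mean error and RMSE per object and over all detected points) can be computed as in the sketch below. Table 1 is not reproduced here, so the example uses made-up depths purely to show the calculation. The paper does not state whether its mean error is signed or absolute, so the sketch reports both; a small signed mean alongside a larger RMSE would indicate errors of both signs that partly cancel.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Stats = Tuple[float, float, float]


def error_stats(errors: Iterable[float]) -> Stats:
    """Return (mean signed error, mean absolute error, RMSE) for depth errors."""
    e: List[float] = list(errors)
    n = len(e)
    if n == 0:
        raise ValueError("no samples")
    mean = sum(e) / n
    mae = sum(abs(x) for x in e) / n
    rmse = math.sqrt(sum(x * x for x in e) / n)
    return mean, mae, rmse


def per_object_stats(samples: Iterable[Tuple[str, float, float]]) -> Dict[str, Stats]:
    """samples: (object label, measured depth, reference depth) triples, in mm.

    Adds an "all" entry computed over every sample, like the last row of a results table.
    """
    by_obj: Dict[str, List[float]] = defaultdict(list)
    for label, measured, reference in samples:
        by_obj[label].append(measured - reference)
    stats = {label: error_stats(errs) for label, errs in by_obj.items()}
    stats["all"] = error_stats(e for errs in by_obj.values() for e in errs)
    return stats


if __name__ == "__main__":
    # Hypothetical readings in mm, not the paper's data.
    demo = [("A", 1498.0, 1500.0), ("A", 1505.0, 1500.0),
            ("B", 1302.0, 1300.0), ("B", 1296.0, 1300.0)]
    for label, (mean, mae, rmse) in per_object_stats(demo).items():
        print(f"{label}: mean {mean:+.2f} mm, MAE {mae:.2f} mm, RMSE {rmse:.2f} mm")
```
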

The accuracy of the proposed system could be improved by implementing a sub-pixel point-center detection algorithm (Ling, 2005; Stancic & Grujic, 2013), while including more points in the projected pattern would increase the system's final resolution. In summary, the only difference between the partial and full scans is the number of points projected onto the area, and hence the resolution of the scan.
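The cited works describe their own, more elaborate methods. As a simpler stand-in, shown only for illustration, one common sub-pixel technique is the intensity-weighted centroid of a small window around the brightest pixel of each detected spot. The window half-size, the crude background removal (subtracting the window minimum) and the synthetic Gaussian spot are assumptions; the sketch assumes NumPy.

```python
import numpy as np


def subpixel_center(image: np.ndarray, peak_row: int, peak_col: int,
                    half: int = 2) -> tuple:
    """Intensity-weighted centroid of a (2*half+1)^2 window around an integer peak.

    Returns (row, col) in pixel units with sub-pixel precision.
    """
    r0, r1 = max(peak_row - half, 0), min(peak_row + half + 1, image.shape[0])
    c0, c1 = max(peak_col - half, 0), min(peak_col + half + 1, image.shape[1])
    win = image[r0:r1, c0:c1].astype(float)
    win = win - win.min()              # crude background removal
    total = win.sum()
    if total == 0.0:                   # flat window: fall back to the integer peak
        return float(peak_row), float(peak_col)
    rows, cols = np.mgrid[r0:r1, c0:c1]
    return float((rows * win).sum() / total), float((cols * win).sum() / total)


if __name__ == "__main__":
    # Synthetic projected spot centred between pixels.
    rr, cc = np.mgrid[0:32, 0:32]
    spot = np.exp(-((rr - 10.3) ** 2 + (cc - 20.7) ** 2) / (2 * 1.2 ** 2))
    pr, pc = np.unravel_index(np.argmax(spot), spot.shape)
    print("integer peak:", (int(pr), int(pc)), "sub-pixel:", subpixel_center(spot, pr, pc))
```

On this synthetic spot the centroid lands within a few hundredths of a pixel of the true centre, while the integer peak is off by 0.3 pixel in each direction; real images with noise and blooming will do worse.
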

Future developments

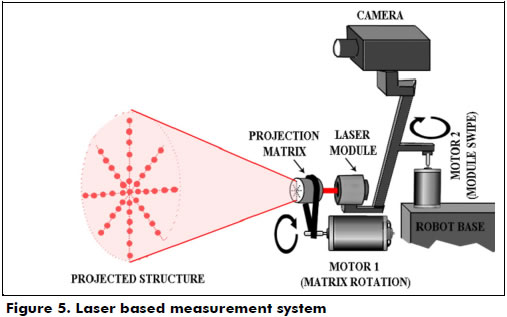

The final system should use a laser projector and a high-speed camera (Figure 5). A single laser beam would pass through a matrix that scatters the light into the desired configuration. Motor 1 would rotate the laser matrix at a controlled speed, thus controlling the system's scanning speed. A slower rotation gives a higher-resolution scan at the cost of a longer scanning time; this setting is useful for object recognition. A faster matrix rotation gives a lower-resolution scan that can be completed quickly, with the evident drawback that the object's properties cannot be recognized and analyzed. The scanner's field of view can be altered slightly by changing the distance between the laser and the matrix, which enables an even higher density of projected points in the desired area. With a high rotation speed and the largest FOV (field of view), a mobile robot obtains only information about the obstacles in its vicinity, but it can do so even while moving. By pointing the scanner module at a single target and reducing the scanning speed (Motor 1 rotation speed), a scanned object can be covered with more points, enabling object recognition and analysis.
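The relation between the speed of Motor 1 and the scan resolution can be made concrete with a little arithmetic. The sketch assumes, hypothetically, that the camera captures one distinct pattern orientation per frame and that the 6-arm pattern repeats every 60 degrees, so a full-coverage cycle ends once the matrix has turned 60 degrees; the motor speeds and the 1000 fps camera rate in the example are placeholders, not specifications of the planned system.

```python
from dataclasses import dataclass

ARMS = 6
POINTS_PER_PATTERN = 49
SECTOR_DEG = 360.0 / ARMS        # the star pattern repeats after this rotation


@dataclass
class RotaryScan:
    step_deg: float       # matrix rotation between consecutive camera frames
    frames: int           # frames needed to sweep one 60-degree sector
    cycle_ms: float       # time for one full-coverage cycle
    points: int           # points collected in that cycle


def rotary_scan(motor_rpm: float, camera_fps: float) -> RotaryScan:
    """Angular step, cycle time and point count for a given matrix rotation speed."""
    deg_per_s = motor_rpm * 360.0 / 60.0
    step = deg_per_s / camera_fps
    frames = max(1, round(SECTOR_DEG / step))   # steps above 60 deg alias the pattern
    return RotaryScan(step, frames, 1000.0 * SECTOR_DEG / deg_per_s,
                      frames * POINTS_PER_PATTERN)


if __name__ == "__main__":
    for rpm in (100.0, 1000.0):
        s = rotary_scan(rpm, camera_fps=1000.0)
        print(f"{rpm:6.0f} rpm: {s.frames:3d} frames, {s.points:4d} points, {s.cycle_ms:6.1f} ms")
```

Under these assumptions, slowing the motor tenfold multiplies both the number of points and the cycle time by ten, which is exactly the resolution-versus-speed trade-off described above.
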

The scanner module is intended to be placed on a rotating platform, which enables the scanner to scan the area horizontally. The scene reconstruction algorithm has to be modified to account for the rotation of the scanner module relative to the robot's main body and for the displacement of the robot, obtained using odometry or a similar technique. As shown by Stancic and Music (2013), vibrations slightly affect a scanner's accuracy, but this can be partially compensated for by adding an accelerometer to the scanner module. The improved scanner is planned as a component of robotic aids for blind and low-vision persons.
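The modification described here amounts to chaining two planar rigid-body transforms: scanner frame to robot body (the platform's pan angle) and body to world (the pose from odometry). A minimal sketch, assuming a flat floor, a scanner mounted at a fixed offset on the body, and a pose given as (x, y, heading); vibration compensation is omitted, and all names and example values are illustrative.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rot_z(angle: float, p: Vec3) -> Vec3:
    """Rotate a point about the vertical z axis."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = p
    return (c * x - s * y, s * x + c * y, z)


def scanner_to_world(p_scanner: Vec3, pan_rad: float, mount: Vec3,
                     pose: Tuple[float, float, float]) -> Vec3:
    """Map a point measured by the scanner into world coordinates.

    pan_rad: platform angle relative to the robot body.
    mount:   scanner position in the body frame.
    pose:    robot (x, y, heading) in the world, e.g. from odometry.
    """
    bx, by, bz = rot_z(pan_rad, p_scanner)              # scanner -> body orientation
    body = (bx + mount[0], by + mount[1], bz + mount[2])  # add mounting offset
    x, y, heading = pose
    wx, wy, wz = rot_z(heading, body)                    # body -> world orientation
    return (wx + x, wy + y, wz)


if __name__ == "__main__":
    # Hypothetical: obstacle 1.5 m in front of the scanner, platform panned 90 deg left,
    # scanner 0.1 m ahead of the body centre at 0.3 m height, robot at (2, 1) facing +x.
    print(scanner_to_world((1.5, 0.0, 0.0), math.radians(90), (0.1, 0.0, 0.3), (2.0, 1.0, 0.0)))
```

Any error in the odometry pose propagates directly into the world coordinates of every scanned point, which is why the paper pairs this step with odometry "or a similar technique".
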

Conclusions

Knowing the exact position of a robot in a coordinate system and sensing obstacles in its vicinity are fundamental requirements for successful mobile robot navigation. To this end, modern mobile robots rely on information obtained from multiple sensors or are guided by an operator. Physically small or low-cost robots do not include advanced scanning sensors or vision systems. Therefore, a novel concept for a low-cost, adaptive 3D scanner and range finder was proposed in this paper. The scanner can work in two modes: fast scanning, used for detecting nearby obstacles and measuring distances to them, and slow scanning, useful when a more detailed scan of a single object is required. When a high-resolution scan of static objects is needed, the robot may have to stop, whereas in obstacle detection mode the system compensates for the robot's motion. The accuracy of both modes is similar; longer scan times only provide higher resolution and, consequently, the capability for limited object analyses. A partial scan covers an area with 49 points, while a full scan covers it with up to 490 points. The mean error of the depth map reconstruction was 1.52 mm, with an RMSE below 1 cm, which is more than adequate for the intended application. Replacing the DLP projector with a laser projector and the compact digital camera with a high-speed camera would greatly increase the system's operating speed.

References

Abdel-Aziz, Y. I., & Karara, H. M. (1971). Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Paper presented at Symposium on Close-Range Photogrammetry, Urbana-Champaign campus, American Society of Photogrammetry.

Abu Dalhoum, A. L., Al-Rawi, M., Al-Sharieh, A., Najjar, A. M., Najjar, M. M., & Shayreh, S. (2008). Remotely Controller Intelligent Vehicle to Handle Public Places Security. WSEAS Transactions on Systems, 7(10), 1058-1069.

Aufrere, R., Mertz, C., & Thorpe, C. (2003). Multiple sensor fusion for detecting location of curbs, walls, and barriers. Paper presented at Intelligent Vehicles Symposium, Columbus, IEEE.

Borenstein, J., Everett, H. R., Feng, L., & Wehe, D. (1997). Mobile Robot Positioning Sensors and Techniques. Journal of Robotic Systems, 14(4), 231-249.

Cang, Y., & Borenstein, J. (2002, May). Characterization of a 2-D Laser Scanner for Mobile Robot Obstacle Negotiation. Paper presented at International Conference on Robotics and Automation, Washington D. C., IEEE.

Correa, D. S. O., Scotti, D. F., Prado, M. G., & Sales, D. F. (2012). Mobile Robots Navigation in Indoor Environments Using Kinect Sensor. Paper presented at Brazilian Conference on Critical Embedded Systems, Sao Paulo, IEEE.

Darms, M. S., Rybski, P., Baker, C., & Urmson, C. (2009). Obstacle detection and tracking for the urban challenge. Intelligent Transportation Systems, 10(3), 475-485.

Fayad, F., & Cherfaoui, V. (2007). Tracking objects using a laser scanner in driving situation based on modeling target shape. Paper presented at Intelligent Vehicles Symposium, Istanbul, IEEE.

Fotiadis, E. P., Garzón, M., & Barrientos, A. (2013). Human Detection from a Mobile Robot Using Fusion of Laser and Vision Information. Sensors, 13(9), 11603-11635.

Fisher, A. (2014). Inside Google's Quest to Popularize Self-Driving Cars. Popular Science. Retrieved from http://www.popsci.com/cars/article/2013-09/google-self-driving-car

Fuerstenberg, K. C. (2005). Pedestrian protection using laser scanners. Paper presented at Intelligent Transportation Systems Conference, Vienna, IEEE.

Gandhi, T., & Trivedi, M. M. (2007). Pedestrian protection systems: Issues, survey, and challenges. Intelligent Transportation Systems, 8(3), 413-430.

Hartley, R., & Zisserman, A. (2004). Multiple View Geometry in Computer Vision. Cambridge, UK: Cambridge University Press.

Heikkila, J., & Silven, O. (1997). A Four-step Camera Calibration Procedure with Implicit Image Correction. Paper presented at Conference on Computer Vision and Pattern Recognition, San Juan, IEEE Computer Society.

Huang, A. S., Antone, M., Olson, E., Moore, D., Fletcher, L., Teller, S., & Leonard, J. (2010). A high-rate, heterogeneous data set from the DARPA Urban Challenge. International Journal of Robotics Research, 29(13), 1595-1601.

Kucsera, P. (2006). Sensors for mobile robot systems. Academic and Applied Research in Military Science, 5(4), 645-658.

Kumari, C. L. (2012). Building Algorithm for Obstacle Detection and Avoidance, System for Wheeled Mobile Robot. Global Journal of Researches in Engineering(F): Electrical and Electronics Engineering, 12(11).

Ling, D., Hsu, H. Y., Lin, G. C. I., & Lee, S. H. (2005). Enhanced image-based coordinate measurement using a super-resolution method. Robotics and Computer-Integrated Manufacturing, 21(6), 579-588.

Liu, H-B., Dong, Y-J., Huangfu, C-H., & Wang F-Z. (2012). Obstacle detection algorithm for mobile robot based on multi-sensor. Paper presented at Chinese Control Conference, Hefei, Chinese Association of Automation.

Mertz, C., Navarro-Serment, L. E., MacLachlan, R., Rybski, P., Steinfeld, A., Suppe, A., Urmson, C., Vandapel, N., Hebert, M., & Thorpe, C. (2013). Moving Object Detection with Laser Scanners. Journal of Field Robotics, 30(1), 17-43.

Navarro-Serment, L. E., Mertz, C., Vandapel, N., & Hebert, M. (2008). LADAR-based Pedestrian Detection and Tracking. Paper presented at Workshop on Human Detection from Mobile Robot Platforms within International Conference on Robotics and Automation, Pasadena, IEEE.

Perrollaz, M., Labayrade, R., Royere, C., Hautiere, N., & Aubert, D. (2006). Long range obstacle detection using laser scanner and stereovision. Paper presented at Intelligent Vehicles Symposium, Tokyo, IEEE.

Premebida, C., Ludwig, O., & Nunes, U. (2009). LIDAR and Vision-Based Pedestrian Detection System. Journal of Field Robotics, 26(9), 696-711.

Rocchini, C., Cignoni, P., Montani, C., Pingi, P., & Scopigno, R. (2001). A low cost 3D scanner based on structured light. Computer Graphics Forum, 20(3), 299-308.

Shapiro, G., & Stockman, G. C. (2001). Computer vision. Upper Saddle River, NJ, USA: Prentice-Hall.

Stancic, I., Grujic, T., & Panjkota, A. (2013). Design, Development, and Evaluation of Optical Motion-Tracking System Based on Active White Light Markers. IET Science, Measurement & Tech-nology, 7(4), 206-214.

Stanèiæ, I., Musiæ, J., & Zanchi, V. (2013). Improved structured light 3D scanner with application to anthropometric parameter estimation. Measurement, 46(1), 716-726.

Sungbok, K., & Hyun, B. K. (2010). High Resolution Mobile Robot Obstacle Detection Using Low Directivity Ultrasonic Sensor Ring. Lecture Notes in Computer Science, 6216, 426-433.

Tsai, R. Y. (1987). A versatile camera calibration technique for high accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE Journal of Robotics and Automation, RA-3(4), 323-344.

Zhang, Z. (1999). Flexible camera calibration by viewing a plane from unknown orientations. Paper presented at International Conference on Computer Vision, Kerkyra, IEEE.

Zollner, H., & Sablatnig, R. (2004). Comparison of methods for geometric camera calibration using planar calibration targets. Paper presented at Workshop of the Austrian Association for Pattern Recognition, Hagenberg, Austrian Association for Pattern Recognition.

Zubair, M., & Choudry, M. A. (2011). Land Mine Detecting Robot Capable of Path Planning. WSEAS Transactions on Systems and Control, 6(4), 105-114.

References

Abdel-Aziz, Y. I., & Karara, H. M. (1971). Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Paper presented at Symposium on Close-Range Photogrammetry, Urbana-Champaign campus, American Society of Photogrammetry.

Abu Dalhoum, A. L., Al-Rawi, M., Al -Sharieh, A., Najjar, A. M., Najjar, M. M., & Shayreh, S. (2008). Remotely Controller Intelligent Vehicle to Handle Public Places Security. WSEAS Transactions on Systems, 7(10), 1058-1069.

Aufrere, R., Mertz, C., & Thorpe, C. (2003). Multiple sensor fusion for detecting location of curbs, walls, and barriers. Paper presented at Intelligent Vehicles Symposium, Columbus, IEEE.

Borenstein, J., Everett, H. R., Feng, L., & Wehe, D. (1997). Mobile Robot Positioning Sensors and Techniques. Journal of Robotic Systems, 14(4), 231-249.

Cang Y., & Borenstein, J. (2002, May). Characterization of a 2-D Laser Scanner for Mobile Robot Obstacle Negotiation. Paper presented at International Conference on Robotics and Automation, Washington D. C., IEEE.

Correra, D. S. O., Scotti, D. F., Prado, M. G., & Sales, D. F. (2012). Mobile Robots Navigation in Indoor Environments Using Kinect Sensor. Paper presented at Brazilian Conference on Critical Embedded Systems, Sao Paulo, IEEE.

Darms, M. S., Rybski, P., Baker, C., & Urmson, C. (2009). Obstacle detection and tracking for the urban challenge. Intelligent Transportation Systems, 10(3), 475-485.

Fayad, F., & Cherfaoui, V. (2007). Tracking objects using a laser scanner in driving situation based on modeling target shape. Paper presented at Intelligent Vehicles Symposium, Istanbul, IEEE.

Fotiadis, E. P., Garzón, M., & Barrientos, A. (2013). Human Detection from a Mobile Robot Using Fusion of Laser and Vision Information. Sensors, 13(9), 11603-11635.

Fisher, A. (2014). Inside Google's Quest to Popularize Self-Driving Cars. Popular Science. Retrieved from http://www.popsci.com/cars/article/2013-09/google-self-driving-car

Fuerstenberg, K. C. (2005). Pedestrian protection using laser scanners. Paper presented at Intelligent Transportation Systems Conference, Vienna, IEEE.

Gandhi, T., & Trivedi, M. M. (2007). Pedestrian protection systems: Issues, survey, and challenges. Intelligent Transportation Systems, 8(3), 413-430.

Hartley, R., & Zisserman, A. (2004). Multiple View Geometry in Computer Vision. Cambridge, UK: Cambridge University Press.

Heikkila, J., & Silven, O. (1997). A Four-step Camera Calibration Procedure with Implicit Image Correction. Paper presented at Conference on Computer Vision and Pattern Recognition, San Juan, IEEE Computer Society.

Huang, A. S., Antone, M., Olson, E., Moore, D., Fletcher, L., Teller, S., & Leonard, J. (2010). A high-rate, heterogeneous data set from the DARPA Urban Challenge. International Journal of Robotics Research, 29(13), 1595-1601.

Kucsera, P. (2006). Sensors for mobile robot systems. Academic and Applied Research in Military Science, 5(4), 645-658.

Kumari, C. L. (2012). Building Algorithm for Obstacle Detection and Avoidance, System for Wheeled Mobile Robot. Global Journal of Researches in Engineering(F): Electrical and Electronics Engineering, 12(11).

Ling, D., Hsu, H. Y., Lin, G. C. I., & Lee, S. H. (2005). Enhanced image-based coordinate measurement using a super-resolution method. Robotics and Computer-Integrated Manufacturing, 21(6), 579-588.

Liu, H-B., Dong, Y-J., Huangfu, C-H., & Wang F-Z. (2012). Obstacle detection algorithm for mobile robot based on multi-sensor. Paper presented at Chinese Control Conference, Hefei, Chinese Association of Automation.

Mertz, C., Navarro-Serment, L. E., MacLachlan, R., Rybski, P., Steinfeld, A., Suppe, A., Urmson, C., Vandapel, N., Hebert, M., & Thorpe, C. (2013). Moving Object Detection with Laser Scanners. Journal of Field Robotics, 30(1), 17-43.

Navarro-Serment, L. E., Mertz, C., Vandapel, N., & Hebert, M. (2008). LADAR-based Pedestrian Detection and Tracking. Paper presented at Workshop on Human Detection from Mobile Robot Platforms within International Conference on Robotics and Automation, Pasadena, IEEE.

Perrollaz, M., Labayrade, R., Royere, C., Hautiere, N., & Aubert, D. (2006). Long range obstacle detection using laser scanner and stereovision. Paper presented at Intelligent Vehicles Symposium, Tokyo, IEEE.

Premebida, C., Ludwig, O., & Nunes, U. (2009). LIDAR and Vision-Based Pedestrian Detection System. Journal of Field Robotics, 26(9), 696-711.

Rocchini, C., Cignoni, P., Montani, C., Pingi, P., & Scopigno, R. (2001). A low cost 3D scanner based on structured light. Computer Graphics Forum, 20(3), 299-308.

Shapiro, G., & Stockman, G. C. (2001). Computer vision. Upper Saddle River, NJ, USA: Prentice-Hall.

Stancic, I., Grujic, T., & Panjkota, A. (2013). Design, Development, and Evaluation of Optical Motion-Tracking System Based on Active White Light Markers. IET Science, Measurement & Technology, 7(4), 206-214.

Stančię, I., Musię, J., & Zanchi, V. (2013). Improved structured light 3D scanner with application to anthropometric parameter estimation. Measurement, 46(1), 716-726.

Sungbok, K., & Hyun, B. K. (2010). High Resolution Mobile Robot Obstacle Detection Using Low Directivity Ultrasonic Sensor Ring. Lecture Notes in Computer Science, 6216, 426-433.

Tsai, R. Y. (1987). A versatile camera calibration technique for high accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE Journal of Robotics and Automation, RA-3(4), 323-344.

Zhang, Z. (1999). Flexible camera calibration by viewing a plane from unknown orientations. Paper presented at International Conference on Computer Vision, Kerkyra, IEEE.

Zollner, H., & Sablatnig, R. (2004). Comparison of methods for geometric camera calibration using planar calibration targets. Paper presented at Workshop of the Austrian Association for Pattern Recognition, Hagenberg, Austrian Association for Pattern Recognition.

Zubair, M., & Choudry, M. A. (2011). Land Mine Detecting Robot Capable of Path Planning. WSEAS Transactions on Systems and Control, 6(4), 105-114.

License

Copyright (c) 2014 Ivo Stančić, Josip Music, Mojmil Cecic

This work is licensed under a Creative Commons Attribution 4.0 International License.

The authors or holders of the copyright for each article hereby confer exclusive, limited and free authorization on the Universidad Nacional de Colombia's journal Ingeniería e Investigación concerning the aforementioned article which, once it has been evaluated and approved, will be submitted for publication, in line with the following items:

1. The version corrected according to the evaluators' suggestions will be submitted, and it will be made clear that the article is an unpublished document whose rights to be authorized are held by the authors; the authors assume total responsibility for the content of the work submitted to Ingeniería e Investigación before the Universidad Nacional de Colombia and third parties;

2. The authorization conferred on the journal will come into force from the date on which it is included in the respective volume and issue of Ingeniería e Investigación in the Open Journal Systems and on the journal's main page (https://revistas.unal.edu.co/index.php/ingeinv), as well as in different databases and indices in which the publication is indexed;

3. The authors authorize the Universidad Nacional de Colombia's journal Ingeniería e Investigación to publish the document in any required format (printed, digital, electronic, or any other form, whether known or yet to be discovered) and authorize Ingeniería e Investigación to include the work in any indices and/or search engines deemed necessary to promote its dissemination;

4. The authors accept that such authorization is given free of charge and they, therefore, waive any right to receive remuneration from the publication, distribution, public communication and any use whatsoever referred to in the terms of this authorization.