Anxiety in Language Testing: The APTIS Case

Ansiedad en la evaluación de las lenguas: El caso APTIS

DOI: https://doi.org/10.15446/profile.v19n_sup1.68575

Keywords: Anxiety, computers, foreign language, testing (en); ansiedad, ordenadores, evaluación de lenguas extranjeras, habilidad oral (es)


Jeannette de Fátima Valencia Robles*

Universidad de Alcalá, Alcalá de Henares, Spain

*jeannette.valencia@edu.uah.es

This article was received on September 11, 2017, and accepted on November 3, 2017.

How to cite this article (APA, 6th ed.):

Valencia Robles, J. (2017). Anxiety in language testing: The APTIS case. Profile: Issues in Teachers’ Professional Development, 19(Suppl. 1), 39-50. https://doi.org/10.15446/profile.v19n_sup1.68575.

This is an Open Access article distributed under the terms of the Creative Commons license Attribution-NonCommercial-NoDerivatives 4.0 International License. Consultation is possible at http://creativecommons.org/licenses/by-nc-nd/4.0/.

The requirement to hold a diploma certifying proficiency in a foreign language is steadily increasing in academic and working environments. Computer-based testing has become a prevailing trend for these and other educational purposes, and each year large numbers of students around the world take online language tests. However, many students might not feel comfortable when taking this type of exam. This paper describes a study of the fairly new APTIS Test (British Council). Thirty-one students took the test and responded to a structured online questionnaire on their feelings while taking it. Results indicate that the test brings a considerable amount of anxiety along with it.

Key words: Anxiety, computers, foreign language, testing.

El requisito de tener un diploma que certifique el nivel de competencia en una lengua extranjera está aumentando en entornos académicos y laborables. La evaluación por ordenadores se ha convertido en una tendencia que facilita la recolección y procesamiento de las respuestas. Cada año, un considerable grupo de estudiantes toma los exámenes online para evaluarse en un idioma. Sin embargo, muchos de ellos pueden no sentirse cómodos con este formato de evaluación. Este artículo describe un estudio con el relativamente nuevo APTIS Test del Consejo Británico. 31 estudiantes realizaron la prueba y respondieron un cuestionario online con preguntas abiertas sobre los sentimientos que tuvieron mientras se examinaban. Los resultados indican que la versión online provoca un considerable nivel de ansiedad.

Palabras clave: ansiedad, ordenadores, evaluación de lenguas extranjeras, habilidad oral

Introduction

Online computer testing has become very common in recent times (Chapelle & Voss, 2016; García-Laborda, 2007; Nash, 2015; Sapriati & Zuhairi, 2010; Shuey, 2002). Educational boards and administrations see in online testing a flexible (Boyles, 2011), fast, and efficient tool for educational measurement (Chang & Lu, 2010; Chapelle & Voss, 2016). Likewise, language tests have become a growing trend in education due to the need to certify candidates’ knowledge of a foreign language for jobs, immigration, and other purposes. Although these factors have led to the appearance of several exam formats and suites since the beginning of the 21st century, the use of online tests to evaluate the four language skills according to the Common European Framework of Reference for Languages (CEFR) or the American Council on the Teaching of Foreign Languages (ACTFL) is still a relatively recent development. Over the past few years, many online tests have been developed for such purposes, including the TOEFL iBT, BULATS, the Cambridge Suite (with their “for school” versions), GRE, and more (Chapelle & Voss, 2016; García-Laborda, 2007, 2009a; Roever, 2001; Stoynoff & Chapelle, 2005). Besides, there is a growing concern about the influence of computer-based tests (CBT) on students’ test performance (Dohl, 2012). Researchers need to know whether this no longer novel type of assessment reduces the common challenges candidates face when taking a language exam. Although many studies describe what test designers have to do to implement good computer tests (Fulcher, 2003; García-Laborda, 2007), limited attention has been devoted to discovering candidates’ test experiences and their opinions about how their speaking and written performance are affected in computer tests.

CBT can process large numbers of test-takers’ answers and present reliable results in a brief time. According to Bartram and Hambleton (2005) and Smith and Caputi (2007), the advantages of CBT have contributed to their popularity. For example, exam development costs and test reporting times are reduced while test security is increased (García-Laborda, 2007). In addition, CBT store responses for automatic and precise analysis and measurement (García-Laborda, 2007). As a result, CBT are transforming the field of testing and language learning.

Despite being digital natives, many students do not feel at ease when taking online tests. The kind of tasks candidates face during the exam might be one of the reasons they feel uncomfortable (Fritts & Marszalek, 2010). Language tests usually include reading, writing, listening, speaking, and grammar tasks. Nakatani (2006) highlights four constraints related to the speaking skill, summarized in the following list:

- Speaking is a negotiated activity. It requires two or more interlocutors.

- Speaking is dynamic. Real life conversations are not structured and do not follow a pre-fixed order or pattern.

- Speaking usually involves choosing the interlocutor, even when that choice is forced by outside impositions (such as unwanted job interviews).

- Most conversations require common cultural and situational ground and, most of the time, previous mutual knowledge.

Unfortunately, these conditions are hardly ever met in a face-to-face speaking task, and they are totally absent in a computer test. Thus, strong feelings are likely to appear in students who take a computer-based oral exam. This lack of real communication, which is forced by the testing situation, usually leads to rejection and discomfort.

The primary focus of this research was to determine the causes of students’ poor results in APTIS speaking tests by analysing participants’ thoughts about the test and their opinions about its influence on their oral performance. Even though it was believed that students had experienced test anxiety, a self-created survey was used to establish anxiety levels. It consisted of an online forum with seven open-ended questions that allowed test-takers to express their feelings in this regard. The results of this investigation provided more insight into the CBT experience and the need for test training and the development of metalinguistic strategies.

Literature Review

The main goal of English language tests is to assess whether test-takers are able to apply different skills to show communicative competence, which involves not only knowledge of grammar structures and lexical words but also the use of strategies to face the unforeseen events of communication (Tuan & Mai, 2015). It has been reported that speaking is the most challenging section of language exams (Sayin, 2015). Previous research has demonstrated that it produces test anxiety (Çağatay, 2015; Gerwing, Rash, Allen Gerwing, Bramble, & Landine, 2015; Tóth, 2012; Tuan & Mai, 2015). As reported by Sayin (2015), test anxiety occurs when cognitive and emotional processes interfere with “competent performance in academic and assessment situations” (p. 113). Therefore, in order to guide English as a foreign language (EFL) learners in developing their oral skills and facing a testing context, it is essential to identify the main challenges examinees usually deal with when taking oral exams (Çağatay, 2015; Tuan & Mai, 2015) and the effects on test-takers’ performances.

First of all, anxiety is not a new issue in the language learning field. Horwitz, Horwitz, and Cope (1986) recognized that EFL learners experience foreign language anxiety (FLA) as part of the complexity and “uniqueness of the learning process”. They explained that FLA is a specific anxiety reaction because some students only experience it in the learning and testing environment. Furthermore, anxiety is not always negative. There are two different types of anxiety that lead learners to perform well or not: the beneficial or facilitative anxiety that positively alerts learners and contributes to their motivation, and the “inhibiting or debilitative anxiety” that frustrates students’ opportunities to show their abilities (Dörnyei as cited in Çağatay, 2015; Sayin, 2015). It is in the latter scenario that FLA represents a problem, because it makes EFL learners experience anxiety components such as fear of negative evaluation, communication apprehension or shyness to interact, and test anxiety (Horwitz et al., 1986). The same feelings are part of oral English test anxiety (Han as cited in Shi, 2012).

The aforementioned FLA components are related to Tuan and Mai’s (2015) list of challenging aspects of the speaking skill during classes and examinations. These aspects can be classified into two categories. On the one hand, there are external factors or performance conditions that might provoke anxiety. These conditions are time pressure, planning time, standard of performance, and amount of support (Tuan & Mai, 2015). Anxiety levels vary depending on the task requirement and the available time students have to prepare their answers (O’Sullivan, 2008), as well as the assistance, feedback, and interactions they receive during the exercise (Tuan & Mai, 2015).

On the other hand, test anxiety can also arise due to three internal factors present in test-takers: listening ability, speaking-related problems, and affective factors (Tuan & Mai, 2015; Ur, 1996). Listening ability plays an important role in communicative tasks, as participants need to understand the spoken message in order to react to it (O’Sullivan, 2008). Thus, being able to interact in speaking tasks depends on the speakers’ skills to perceive, hear, and decode input from conversations (Ur, 1996). Another challenging factor concerns speaking-related problems. Ur (1996) mentions that inhibition about talking, a lack of ideas, and a lack of practice usually cause students not to speak during classes and exams. Finally, there are affective factors like motivation, self-concept, and anxiety itself that lead students to have a helpless attitude towards the speaking skill.

Bearing these challenges in mind makes it easier to understand what EFL learners undergo while facing speaking tasks during exams. O’Sullivan (2008) highlights the different considerations test developers take into account when designing reliable tests. For instance, they try to address the common characteristics of test-takers to create fair examinations. Although anxiety does not appear among the psychological features, motivation, affective schemata, and emotional state do. Test designers also consider the cognitive processes and cognitive resources examinees are supposed to follow and use to succeed, provided that anxiety does not cause cognitive interference during the development of their responses (Sarason, 1984). These aspects facilitate the development of reliable tests.

CBTs aim to improve the testing experience so candidates can perform better. In speaking tests with this format, examinees interact with the test software by wearing headsets and speaking into a microphone (Shi, 2012). The role of the computer is to present instructions and tasks, control the preparation and response time, and store candidates’ responses (Shi, 2012). Despite these features, several recent publications have documented that CBTs are also creating test anxiety and computer anxiety among test-takers (Grubb, 2013; Sayin, 2015; Shi, 2012).

Shi (2012) found that there are some subjective and objective factors that cause test anxiety in computer-based oral English tests. The questionnaires’ results showed that the subjective causes were discomfort when talking to a computer, lack of time management skills, and previous negative testing experiences. On the other hand, more objective causes were the noise in the multimedia lab, the lack of interaction, and the difficulty and complexity of the topics (Shi, 2012).

In a different study, Grubb (2013) sought to determine whether anxiety levels varied between CBT and traditional paper-and-pencil assessments. Although students were solving math problems, this case addressed the effect of CBT on anxiety levels. Test performance varied considerably between the two groups, revealing that CBT did not lessen students’ anxiety levels. In fact, students who were assessed with a computer performed worse. Grubb concludes that students might need to become familiar with the new test modality in order to see significant differences.

Finally, Sayin (2015) conducted a study to learn about students’ attitudes towards CBT after a five-week test training period. Moreover, he wanted to determine the causes of test anxiety in the exam that participants took during the study. The results demonstrated that timing was the main cause of test anxiety.

Working with CBT is becoming a dominant element in the language testing field of the 21st century. Several research projects are being carried out to establish parameters for reliable interfaces that enable learners to interact appropriately during the testing experience (Chen, 2014; Dina & Ciornei, 2013; García-Laborda, 2009a; García-Laborda, Magal-Royo, de Siqueira Rocha, & Fernández Álvarez, 2010). For this reason, this study aims to contribute to the state of the art on the influence of computer-based oral English tests on test-takers’ speaking performance.

Method

This qualitative study followed an exploratory-interpretive paradigm to understand participants’ perceptions of the APTIS speaking test and to explore their awareness of what caused their poor performance in that examination. Furthermore, this pilot study sought to identify students’ readiness to present suggestions for improvement.

Participants

Thirty-one students (23 females and 8 males), aged 17 to 21 and enrolled in the teaching degree at Universidad de Alcalá (Guadalajara, Spain) in 2014-2015, took the APTIS test. They were the first group of students to take the test in Guadalajara, Spain. Hence, they were not familiar with its format, nor had they received any test training. However, the group managed to get good results in the reading, writing, and listening sections. It was the speaking section that represented a problem. During the analysis of data, each participant was identified by the word “Candidate” plus the number of their response in order to protect participants’ identity.

Instrument of Data Collection

An online forum on the Blackboard platform was designed as a self-developed instrument to collect information related to participants’ feelings and thoughts towards the computer-based oral test they took. The seven open-ended questions were based on three main themes: familiarity with, ergonomics of, and feelings towards the APTIS speaking test. The questions were designed in English and then translated into participants’ mother tongue, Spanish. The questions are included in the Appendix section and are presented in English for publication purposes. This online forum aimed to provide data for relating the different factors that made participants feel uncomfortable during the exam to the results of previous studies.

Ethical Considerations

Students were told to participate in the academic forum as part of the course. Although students’ names remain confidential, the questions were visible in the university’s Blackboard forum, and participants were allowed to see each other’s entries as they belonged to the same course.

Data Collection and Analysis Procedures

After receiving the test results, the 31 participants were required to submit their answers in order to find out the causes that prevented them from performing well in the speaking section. Thirty-one entries to the academic forum were collected and printed for analysis. As usually happens in forum discussions, almost all participants decided to provide their opinion without including the questions or following their order, though all the answers are related to the themes of the open-ended questions. Only one entry was discarded, as its author had not taken the APTIS speaking exam.

On the basis of the literature review, data were organized and coded by identifying the different anxiety factors in students’ descriptions of their opinions about and experiences with the APTIS speaking test. For the purpose of this study, some general descriptions were assigned particular labels to facilitate the coding and further analysis. For instance, explanations of problems with the test software, distracting program sounds, internet connections, screens turning black, headphones, and microphones were given the code technical issues. Furthermore, participants’ comments on how difficult it was to understand and use the program itself were labelled interface experience. Similar responses were classified in the same category or anxiety factor in order to establish the number of participants who reported the same cause of test anxiety. Those factors were grouped by themes for further analysis. The information was then tabulated using spreadsheets and formulas in Microsoft Excel to develop the figures related to each research question.
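The coding and tabulation steps described above can be illustrated with a short sketch. It is not the procedure used in the study, where coding was done by reading printed entries and tabulation was done in Excel. Here simple keyword matching stands in for manual coding, the `CODEBOOK` phrases and the sample entries are hypothetical, and percentages are computed as shares of all coded mentions, which is only one assumption about how the figures might have been derived.

```python
from collections import Counter

# Hypothetical codebook: trigger phrase -> anxiety-factor code.
# The study assigned codes manually; keyword matching is only a stand-in.
CODEBOOK = {
    "internet connection": "technical issues",
    "headphones": "technical issues",
    "microphone": "technical issues",
    "understand how to use": "interface experience",
    "timer": "time constraints",
    "at the same time": "simultaneous talk",
}


def code_entry(text):
    """Return the set of codes whose trigger phrase appears in one forum entry."""
    lowered = text.lower()
    return {code for phrase, code in CODEBOOK.items() if phrase in lowered}


def tabulate(entries):
    """Count entries per code and return each code's share of all mentions (%)."""
    counts = Counter()
    for text in entries.values():
        # A set is used so that each participant counts once per code.
        counts.update(code_entry(text))
    total = sum(counts.values())
    if total == 0:
        return {}
    return {code: round(100 * n / total, 1) for code, n in counts.items()}


# Invented sample entries, keyed by anonymized label.
sample_entries = {
    "Candidate 1": "The timer made me nervous and my headphones and microphone failed.",
    "Candidate 2": "Fifteen people were speaking at the same time.",
    "Candidate 3": "I kept looking at the timer.",
}

shares = tabulate(sample_entries)
```

Counting each participant once per code mirrors the stated aim of establishing how many participants reported the same cause. Dividing by the number of participants instead of the number of mentions would be an equally plausible choice.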

The Research Questions

Five questions were considered during the process:

- What were participants’ opinions towards the APTIS test?

- What were the causes of students’ poor performance in the APTIS speaking test?

- Are those causes similar to the ones reported in previous studies?

- Did any participant establish a relationship between their main sources of anxiety and their poor test performances?

- Did any participant make suggestions to improve the APTIS speaking test?

Results

The results are organized according to the research questions and the main themes identified during the analysis. Figures were generated after arranging and tabulating data.

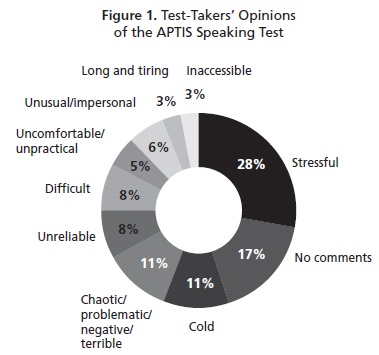

Figure 1 shows participants’ opinions about the APTIS test. Although they gave comments on the whole test, only the opinions related to the speaking section were included in the figure.

As can be seen in Figure 1, only 17% of the test-takers did not comment on the exam, while the other 83% of the candidates used several adjectives to describe the test. For example, some students argued the exam was “inaccessible” (3%) or “long and tiring” (3%). Another 6% of the participants pointed out that, in general, talking to a computer was an “unusual and impersonal experience”. Furthermore, 11% of the students claimed the speaking section was “a chaotic and terrible situation” because they faced some problems that led to negative results. In fact, 28% of the participants clearly labelled the APTIS test as “a stressing exam”, which is consistent with the conclusions of previous studies. The causes behind these opinions are depicted in Figure 2.

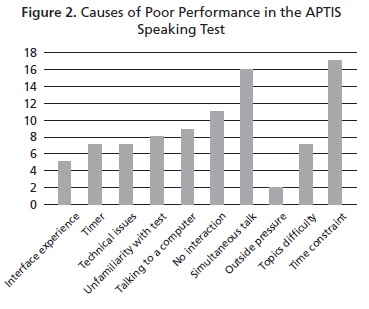

Figure 2 illustrates the anxiety factors mentioned by the participants during the academic online forum. Time constraints and background noise caused by simultaneous talk were the most common causes of distress and anxiety (19% and 18%, respectively), while outside pressure, such as depending on the test results to pursue a university degree, was the least common cause of anxiety (2%). However, in order to interpret the results in the framework of the literature review, four factors will be considered: the subjective and objective factors (Shi, 2012) and the external and internal factors (Tuan & Mai, 2015) of test anxiety in computer-based oral exams.

Regarding the subjective reasons for test anxiety identified by Shi (2012), it was also found that external factors contributed to the increase in anxiety. For example, talking to a computer and unfamiliarity with the test format are external factors that accounted for 10% and 9%, respectively. A few participants claimed that they became “stressed” and “uncomfortable” when they realised they would have to talk to a “machine”. In addition, responses revealed students got “nervous” because they were not familiar with the format and procedure of the CBT. This suggests that digital competence is an important issue that needs to be highlighted (Shi, 2012), since it seems that test-takers have not fully achieved it.

Similar results were obtained for time constraints, which were the main single cause of anxiety (19%), as in Shi’s (2012) and Sayin’s (2015) studies. Participants described the available time to observe pictures, reflect, organize, and deliver ideas as “insufficient”. Moreover, students reported that the visual timer increased their nervousness. Some complained the countdown timer made them feel “really nervous” and “anxious” and they even became “obsessive about looking at it” with distress. These data suggest that test-takers need to work on metacognitive and time management skills to organize and prepare responses. These skills can help students deal with time pressure and planning time, which are conditions that affect speaking performance (Tuan & Mai, 2015). Although there was no answer related to previous negative experiences, some participants (2%) reported worries about how the results could affect their future professional development. For instance, they claimed they needed to pass or score well on the APTIS test in order to “pursue a career” (Candidate 3) or to “be admitted in the English mention” of their careers (Candidate 5).

Regarding the objective reasons to experience test anxiety, students described external and internal factors that influenced their performance negatively (Tuan & Mai, 2015). Once again, the study reflects what Shi (2012) has mentioned about the number of reports related to noise in the multimedia lab. Participants identified the logistics problem of taking a test in a room where “15 people were doing speaking exercises at the same time” (Candidate 3). In their opinion, “it was really difficult to concentrate” because they could “hear each other conversations” (Candidates 1, 3, 4, 8, 10, 11, 12, 14, 16, 18, 20, 22, 23, 24, 27, 30). As a result, 19% of the participants claimed those simultaneous talks caused anxiety in them since they found it hard to express themselves “clearly” in an exam that some identified as “chaotic”. According to the descriptions provided, this external factor could have diminished test-takers’ listening abilities to understand what they were required to do in the speaking section, which might have directly affected their speaking skills.

Additionally, 11% of the test-takers stated that the lack of interaction, feedback, and support made it difficult to perform well during the task. Students said they were not confident talking to a computer because they needed to rely on “human gestures”, “non-verbal communication”, and “body language”. Eight per cent of the examinees also argued that the difficulty of the topics did not allow them to develop their ideas and “show off [their] knowledge and skills” (Candidate 23). In their view, topics were so “unknown”, “complex”, and “unusual” that they would not even be able to discuss them in their “mother tongue”, Spanish. This result is similar to Shi’s (2012) findings about topic complexity. Young (as cited in Shi, 2012) discovered that learners’ anxiety levels increased in relation to the ambiguity and difficulty of the test exercises and formats.

Participants also mentioned other causes that had not been reported in previous studies, such as technical issues and interface navigability. Eight per cent of the participants reported concrete cases where they experienced test anxiety due to problems with the internet connection, software, or hardware. For example, some participants had to move to another computer if they had lost their internet connection or if “the speaking test program did not worked properly on theirs” (Candidate 3). They also complained about how well their headphones worked, but it remains to be established whether this is related to the simultaneous talk in the room. In addition, only 6% of the examinees said the interface of the program was a leading factor in their anxiety, since they had to “take some time to really understand how to use it” (Candidate 27) or they tended to get distracted by a “sound” that indicated the start and end of each activity.

These descriptions of candidates’ perceptions of the test experience were used to answer the third question that aimed to explore how many participants were able to relate those factors to test anxiety. Figure 3 shows that 50% of the participants recognized they were anxious during the test while 23% did not mention anything about the causes of their low performance. Surprisingly, 27% claimed not to have experienced anxiety at all, but these data are not consistent with their descriptions. The different adjectives they used to describe their feelings towards the test, and the test itself, can be observed in Figure 1.

The results of the fourth question show the number of participants who were able to relate their test anxiety to their poor test performance. Seventy-seven per cent of the students focused more on describing their experience than referring to their test performance as the issue had not been explicitly included in the questions of the forum. Nevertheless, the students who did make connections between these two variables highlighted that their sources of anxiety, such as simultaneous talk, talking to a computer, unfamiliarity with the test, and interface navigability, caused their poor results.

For example, they concluded that the simultaneous talk affected their oral skills and did not allow them to “demonstrate what [they] really know” (Candidate 23). Others claimed that “talking to the computer could negatively influence on the results” (Candidate 6). They also noted that if they had known the speaking test procedure, they “would have got a better result”. Furthermore, students argued that the presentation of the different exercises was “distracting” and “not well-structured” (Candidate 25). These ideas made them think the test was not “reliable”.

The last research question was concerned with participants’ initiative to propose suggestions to improve the APTIS test. Despite not having been explicitly asked, participants could have taken advantage of the forum to share their opinions in this regard, especially in the first, fourth, fifth, and sixth questions. Nonetheless, only 27% of the examinees offered suggestions so that future test-takers could perform better in “a calmer” and more real-life environment. In their opinion, the test program should allow more time to “reflect and organize ideas” before requiring participants to give an answer, and a “pause button” or a “recording interface” could be implemented. In addition, some candidates thought that, in the future, the simultaneous talk factor could be avoided by not including 15 people in the same room, but “taking the test in pairs” (Candidate 22) or by “speaking to another candidate through webcams” (Candidate 14).

Conclusions

The present study focused on exploring the causes of test-takers’ low oral performance in the speaking section of the APTIS test. It also analysed candidates’ opinions about the speaking exam and their thoughts on whether it caused them anxiety or not. From the research that has been carried out, it is possible to conclude that these participants experienced test anxiety during the examination of their oral skills. The results give insights for the development of more concrete questions to be used as part of a larger formal test anxiety inventory (currently in development). A secondary issue could be the relevance for teachers and teacher training, where knowledge of testing applications is highly relevant to the washback effect (García-Laborda & Magal-Royo, 2009), especially through alternative computer applications (García-Laborda, 2009b). In addition, further investigations could be conducted to determine concrete test anxiety levels in test-takers of computer-based oral English tests and the extent to which that test anxiety influences test performance. Paying attention to the main causes of test anxiety reported in the studies could strengthen the basis for performance in computer-based tests.

The study presents useful information for teacher trainers and people in charge of providing the CBT service, as there are aspects to consider for improving both students’ exam preparation lessons and the testing experience. In light of the results, teachers need to create opportunities for students to become familiar with the test format and the test requirements. Future test-takers will need this to get used to the APTIS test, and any other CBT, and to be prepared to face a wide range of topics. It is also of great importance that test-training courses offer practice in order to develop time management skills and metalinguistic skills so that examinees can organize ideas and evaluate the appropriateness of using them as responses in the given time.

Regarding the improvement of the testing experience, a major finding is that there are technical and logistical issues that might need consideration in further research. For example, it is essential to guarantee test-takers that the centre or computer lab will have a high-quality internet connection and adequate software and hardware facilities. Furthermore, researchers need to bear in mind to what extent a noisy multimedia lab, without room for repetition, clarification, and rephrasing, resembles real-life communication. Finally, it is essential to identify whether these conditions provoke cognitive interference or diminish effective cognitive and communicative processes.

By and large, the findings of this pilot study would enable researchers to design further investigations on how to improve computer-based oral English tests so that test-takers can manage anxiety and demonstrate their communicative skills in a more valid and reliable testing environment.

1. A previous abstract version of this paper was presented at the WCES and Linelt conferences in 2016 and 2017 by the same author.

References

Bartram, D., & Hambleton, R. K. (Eds.). (2005). Computer-based testing and the internet: Issues and advances. Chichester, UK: John Wiley & Sons. https://doi.org/10.1002/9780470712993.

Boyles, P. C. (2011). Maximizing learning using online student assessment. Online Journal of Distance Learning Administration, 14(3). Retrieved from http://www.westga.edu/~distance/ojdla/fall143/boyles143.html.

Çağatay, S. (2015). Examining EFL students’ foreign language speaking anxiety: The case at a Turkish state university. Procedia: Social and Behavioral Sciences, 199(3), 648-656. https://doi.org/10.1016/j.sbspro.2015.07.594.

Chang, Y.-C., & Lu, H.-Y. (2010). Online calibration via variable length computerized adaptive testing. Psychometrika, 75(1), 140-157. https://doi.org/10.1007/s11336-009-9133-0.

Chapelle, C. A., & Voss, E. (2016). 20 years of technology and language assessment in Language Learning & Technology. Language Learning & Technology, 20(2), 116-128.

Chen, H. (2014). A proposal on the validation model of equivalence between PBLT and CBLT. Journal of Education and Learning, 3(4), 17-25. https://doi.org/10.5539/jel.v3n4p17.

Dina, A.-T., & Ciornei, S.-I. (2013). The advantages and disadvantages of computer assisted language learning and teaching for foreign languages. Procedia: Social and Behavioral Sciences, 76, 248-252. https://doi.org/10.1016/j.sbspro.2013.04.107.

Dohl, C. (2012). Foreign language student anxiety and expected testing method: Face-to-face versus computer mediated testing [Doctoral dissertation]. University of Nevada, Reno, USA.

Fritts, B. E., & Marszalek, J. M. (2010). Computerized adaptive testing, anxiety levels, and gender differences. Social Psychology of Education: An International Journal, 13(3), 441-458. https://doi.org/10.1007/s11218-010-9113-3.

Fulcher, G. (2003). Interface design in computer-based language testing. Language Testing, 20(4), 384-408. https://doi.org/10.1191/0265532203lt265oa.

García-Laborda, J. (2007). On the net: Introducing standardized EFL/ESL exams. Language Learning & Technology, 11(2), 3-9.

García-Laborda, J. (2009a). Interface architecture for testing in foreign language education. Procedia: Social and Behavioral Sciences, 1(1), 2754-2757. https://doi.org/10.1016/j.sbspro.2009.01.488.

García-Laborda, J. (2009b). Review of the book Tips for teaching with CALL: Practical approaches to computer-assisted language learning, by C. A. Chapelle & J. Jamieson. Language Learning & Technology, 13(2), 15-21.

García-Laborda, J., & Magal-Royo, T. (2009). Training senior teachers in compulsory computer based language tests. Procedia: Social and Behavioral Sciences, 1(1), 141-144. https://doi.org/10.1016/j.sbspro.2009.01.026.

García-Laborda, J., Magal-Royo, T., de Siqueira Rocha, J. M., & Fernández Álvarez, M. (2010). Ergonomics factors in English as a foreign language testing: The case of PLEVALEX. Computers & Education, 54(2), 384-391. https://doi.org/10.1016/j.compedu.2009.08.021.

Gerwing, T. G., Rash, J. A., Allen Gerwing, A. M., Bramble, B., & Landine, J. (2015). Perceptions and incidence of test anxiety. The Canadian Journal for the Scholarship of Teaching and Learning, 6(3). https://doi.org/10.5206/cjsotl-rcacea.2015.3.3.

Grubb, G. (2013). Does the use of computer-based assessments produce less test anxiety symptoms than traditional paper and pencil assessment? (Master’s thesis). Ohio University, Athens, USA. Retrieved from https://www.ohio.edu/education/academic-programs/upload/Grubb_MasterResearchProject.pdf.

Horwitz, E. K., Horwitz, M. B., & Cope, J. (1986). Foreign language classroom anxiety. The Modern Language Journal, 70(2), 125-132. https://doi.org/10.1111/j.1540-4781.1986.tb05256.x.

Nakatani, Y. (2006). Developing an oral communication strategy inventory. The Modern Language Journal, 90(2), 151-168. https://doi.org/10.1111/j.1540-4781.2006.00390.x.

Nash, J. A. (2015). Future of online education in crisis: A call to action. TOJET: The Turkish Online Journal of Educational Technology, 14(2), 80-88.

O’Sullivan, B. (2008). Notes on assessing speaking. Retrieved from http://www.lrc.cornell.edu/events/past/2008-2009/papers08/osull1.pdf.

Roever, C. (2001). Web-based language testing. Language Learning & Technology, 5(2), 84-94.

Sapriati, A., & Zuhairi, A. (2010). Using computer-based testing as alternative assessment method of student learning in distance education. Turkish Online Journal of Distance Education, 11(2), 161-169.

Sarason, I. G. (1984). Stress, anxiety and cognitive interference: Reactions to tests. Journal of Personality and Social Psychology, 46(4), 929-938. https://doi.org/10.1037/0022-3514.46.4.929.

Sayin, B. A. (2015). Exploring anxiety in speaking exams and how it affects students’ performance. International Journal of Education and Social Science, 2(12), 112-118.

Shi, F. (2012). Exploring students’ anxiety in computer-based oral English test. Journal of Language Teaching and Research, 3(3), 446-451. https://doi.org/10.4304/jltr.3.3.446-451.

Shuey, S. (2002). Assessing online learning in higher education. Journal of Instruction Delivery Systems, 16(2), 13-18.

Smith, B., & Caputi, P. (2007). Cognitive interference model of computer anxiety: Implications for computer-based assessment. Computers in Human Behavior, 23(3), 1481-1498. https://doi.org/10.1016/j.chb.2005.07.001.

Stoynoff, S., & Chapelle, C. A. (2005). ESOL tests and testing: A resource for teachers and program administrators. Alexandria, US: TESOL Publications.

Tóth, Z. (2012). Foreign language anxiety and oral performance: Differences between high- vs. low-anxious EFL students. US-China Foreign Language, 10(5), 1166-1178.

Tuan, N. H., & Mai, T. N. (2015). Factors affecting students’ performance at Le Thanh Hien high school. Asian Journal of Educational Research, 3(2), 8-23.

Ur, P. (1996). A course in language teaching: Practice and theory. Cambridge, UK: Cambridge University Press.

About the Author

Jeannette de Fátima Valencia Robles is a second-year doctoral student of Modern Languages at Universidad de Alcalá, Spain. She received a bachelor’s degree in English language from Universidad Católica de Santiago de Guayaquil, in Ecuador, and a master’s degree in teaching English as a foreign language from Universidad de Alcalá. She is interested in EFL and ESL education, adolescent development, and technology.

Appendix: Academic Forum Questions

- What is your general opinion about the exam?

- In your opinion, does it reflect your knowledge of the English language accurately?

- If you already have a level test, do test results correspond?

- Does talking to a computer give you anxiety? Do you prefer to talk to a person? Is a minute enough time to prepare the answer for the longer topic?

- Did you find it easy to use the test software? Was it user-friendly and intuitive?

- What were the main difficulties you found?

- What advantages does this exam have?

References

Bartram, D., & Hambleton, R. K. (Eds.). (2005). Computer-based testing and the internet: Issues and advances. Chichester, UK: John Wiley & Sons. https://doi.org/10.1002/9780470712993.

Boyles, P. C. (2011). Maximizing learning using online student assessment. Online Journal of Distance Learning Administration, 14(3), Retrieved from http://www.westga.edu/~distance/ojdla/fall143/boyles143.html.

Çagatay, S. (2015). Examining EFL students’ foreign language speaking anxiety: The case at a Turkish state university. Procedia: Social and Behavioral Sciences, 199(3), 648-656. https://doi.org/10.1016/j.sbspro.2015.07.594.

Chang, Y.-C., & Lu, H.-Y. (2010). Online calibration via variable length computerized adaptive testing. Psychometrika, 75(1), 140-157. https://doi.org/10.1007/s11336-009-9133-0.

Chapelle, C. A., & Voss, E. (2016). 20 years of technology and language assessment in language learning & technology. Language Learning & Technology. Language Learning & Technology, 20(2), 116-128.

Chen, H. (2014). A Proposal on the validation model of equivalence between PBLT and CBLT. Journal of Education and Learning, 3(4), 17-25. http://dx.doi.org/10.5539/jel.v3n4p17.

Dina, A.-T., & Ciornei, S.-I. (2013). The advantages and disadvantages of computer assisted language learning and teaching for foreign languages. Procedia: Social and Behavioral Sciences, 76, 248-252. https://doi.org/10.1016/j.sbspro.2013.04.107.

Dohl, C. (2012). Foreign language student anxiety and expected testing method: Face-to-face versus computer mediated testing [Doctoral dissertation]. University of Nevada, Reno, USA.

Fritts, B. E., & Marszalek, J. M. (2010). Computerized adaptive testing, anxiety levels, and gender differences. Social Psychology of Education: An International Journal, 13(3), 441-458. https://doi.org/10.1007/s11218-010-9113-3.

Fulcher, G. (2003). Interface design in computer-based language testing. Language testing, 20(4), 384-408. https://doi.org/10.1191/0265532203lt265oa.

García-Laborda, J. (2007). On the net: Introducing standardized EFL/ESL exams. Language Learning & Technology, 11(2), 3-9.

García-Laborda, J. (2009a). Interface architecture for testing in foreign language education. Procedia: Social and Behavioral Sciences, 1(1), 2754-2757. https://doi.org/10.1016/j.sbspro.2009.01.488.

García-Laborda, J. (2009b). Review of the book Tips for teaching with CALL: Practical approaches to computer-assisted language learning, by C. A. Chapelle & J. Jamieson. Language Learning & Technology, 13(2), 15-21.

García-Laborda, J., & Magal-Royo, T. (2009). Training senior teachers in compulsory computer based language tests. Procedia: Social and Behavioral Sciences, 1(1), 141-144. https://doi.org/10.1016/j.sbspro.2009.01.026.

García-Laborda, J., Magal-Royo, T., de Siqueira Rocha, J. M., & Fernández Álvarez, M. (2010). Ergonomics factors in English as a foreign language testing: The case of PLEVALEX. Computers & Education, 54(2), 384-391. https://doi.org/10.1016/j.compedu.2009.08.021.

Gerwing, T. G., Rash, J. A., Allen Gerwing, A. M., Bramble, B., & Landine, J. (2015). Perceptions and incidence of test anxiety. The Canadian Journal for the Scholarship of Teaching and Learning, 6(3). http://dx.doi.org/10.5206/cjsotl-rcacea.2015.3.3.

Grubb, G. (2013). Does the use of computer-based assessments produce less test anxiety symptoms than traditional paper and pencil assessment? (Master’s thesis). Ohio: Ohio University, Athens, USA. Retrieved from https://www.ohio.edu/education/academic-programs/upload/Grubb_MasterResearchProject.pdf.

Horwitz, E. K., Horwitz, M. B., & Cope, J. (1986). Foreign language classroom anxiety. The Modern Language Journal, 70(2), 125-132. https://doi.org/10.1111/j.1540-4781.1986.tb05256.x.

Nakatani, Y. (2006). Developing an oral communication strategy inventory. Modern Language Journal, 90(2), 151-168. https://doi.org/10.1111/j.1540-4781.2006.00390.x.

Nash, J. A. (2015). Future of online education in crisis: A call to action. TOJET: The Turkish Online Journal of Educational Technology, 14(2), 80-88.

O’Sullivan, B. (2008). Notes on assessing speaking. Retrieved from http://www.lrc.cornell.edu/events/past/2008-2009/papers08/osull1.pdf.

Roever, C. (2001). Web-based language testing. Language Learning & Technology, 5(2), 84-94.

Sapriati, A., & Zuhairi, A. (2010). Using computer-based testing as alternative assessment method of student learning in distance education. Turkish Online Journal of Distance Education, 11(2), 161-169.

Sarason, I. G. (1984). Stress, anxiety and cognitive interference: Reactions to tests. Journal of Personality and Social Psychology, 46(4), 929-938. https://doi.org/10.1037/0022-3514.46.4.929.

Sayin, B. A. (2015). Exploring anxiety in speaking exams and how it affects students’ performance. International Journal of Education and Social Science, 2(12), 112-118.

Shi, F. (2012). Exploring students’ anxiety in computer-based oral English test. Journal of Language Teaching and Research, 3(3), 446-451. https://doi.org/10.4304/jltr.3.3.446-451.

Shuey, S. (2002). Assessing online learning in higher education. Journal of Instruction Delivery Systems, 16(2), 13-18.

Smith, B., & Caputi, P. (2007). Cognitive interference model of computer anxiety: Implications for computer-based assessment. Computers in Human Behavior, 23(3), 1481-1498. https://doi.org/10.1016/j.chb.2005.07.001.

Stoynoff, S., & Chapelle, C. A. (2005). ESOL tests and testing: A resource for teachers and program administrators. Alexandria, US: TESOL Publications.

Tóth, Z. (2012). Foreign language anxiety and oral performance: Differences between high- vs. low-anxious EFL students. US-China Foreign Language, 10(5), 1166-1178.

Tuan, N. H., & Mai, T. N. (2015). Factors affecting students’ performance at Le Thanh Hien high school. Asian Journal of Educational Research, 3(2), 8-23.

Ur, P. (1996). A course in language teaching: Practice and theory. Cambridge, UK: Cambridge University Press.

License

Copyright (c) 2017 Profile: Issues in Teachers' Professional Development

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

You are authorized to copy and redistribute the material in any medium or format as long as you give appropriate credit to the authors of the articles and to Profile: Issues in Teachers' Professional Development as original source of publication. The use of the material for commercial purposes is not allowed. If you remix, transform, or build upon the material, you may not distribute the modified material.

Authors retain the intellectual property of their manuscripts with the following restriction: first publication is granted to Profile: Issues in Teachers' Professional Development.